Alibaba Group has introduced the revolutionary QwenLong-L1 architecture that enables large language models to efficiently process extremely long documents. The new technology opens possibilities for analyzing corporate reports, financial statements, and legal contracts of any size.

G. Ostrov

Alibaba Group has introduced QwenLong-L1 — a revolutionary architecture that solves one of the main problems of modern language models: the inability to efficiently work with long texts. This breakthrough could fundamentally change the approach to analyzing large documents in corporate environments.

The Long Context Problem

Until recently, large reasoning models (LRM) faced serious limitations when working with long texts. Although reinforcement learning significantly improved their task-solving skills, model efficiency dropped sharply when processing texts exceeding 4,000 tokens.

This limitation hindered practical applications of LRM in areas requiring work with extensive knowledge bases — scientific research, jurisprudence, financial analysis, and corporate document management.

QwenLong-L1's Innovative Approach

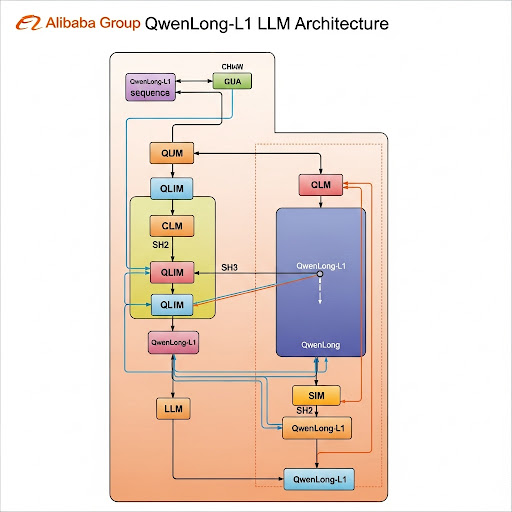

The key advantage of QwenLong-L1 lies in its multi-stage training approach, which includes three main phases:

1. Supervised Fine-Tuning (SFT)

In the first stage, the model learns from reasoning examples with long contexts, creating a foundation for accurate information extraction from large data volumes.

2. Progressive Reinforcement Learning

In the second stage, the length of input documents is gradually increased, ensuring stable model adaptation to more complex tasks. This approach avoids sharp performance drops.

3. Complex Example Selection

The final stage uses the most difficult examples from previous phases, stimulating the model to master the most challenging tasks and explore different reasoning paths.

Hybrid Reward System

QwenLong-L1 uses an innovative hybrid reward system that combines:

- Strict rule-based verification to guarantee accuracy

- Evaluation by another LLM that compares the semantic content of responses

This approach allows more flexible processing of various correct answer variants characteristic of long and complex documents.

Impressive Test Results

Testing QwenLong-L1 on seven benchmark datasets for document question-answering tasks showed outstanding results:

- QwenLong-L1-32B — performance comparable to Anthropic's Claude-3.7 Sonnet Thinking, surpasses OpenAI o3-mini and Qwen3-235B-A22B

- QwenLong-L1-14B — outperformed Google Gemini 2.0 Flash Thinking and Qwen3-32B

Specialized Skills

Training with QwenLong-L1 led to the development of specialized skills for working with long contexts:

- Better answer "grounding" — linking to specific document parts

- Setting intermediate goals

- Error tracking and correction

- Answer verification

Open Access and Applications

Alibaba has released QwenLong-L1 code and trained model weights as open source, opening wide possibilities for application in various fields:

- Legal sector — contract and legislation analysis

- Finance — processing reports and analytical materials

- Corporate sector — working with internal documentation

- Scientific research — analyzing large datasets

This Alibaba achievement could become a turning point in artificial intelligence development, making long document analysis accessible and efficient for a wide range of tasks.

More detailed information can be found on the official Alibaba Group website.

In case of any problems, write to us, we will help quickly and efficiently!