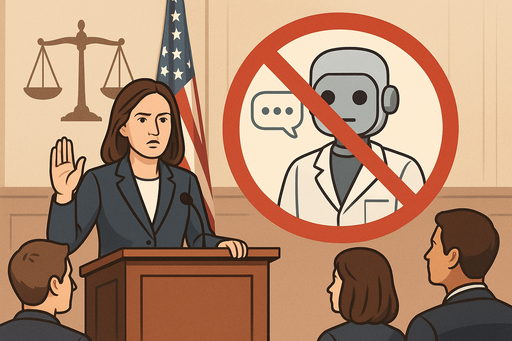

American lawmakers are actively introducing restrictions on the use of artificial intelligence in mental health care. The measures were prompted by dangerous incidents involving minors who had interacted with AI therapists.

G. Ostrov

American authorities have begun actively regulating the use of artificial intelligence in mental health care. These legislative initiatives aim to protect users, especially minors, from potentially dangerous advice given by AI assistants.

Dangerous Cases Involving AI Therapists

The American Psychological Association has documented at least two cases of dangerous behavior among minors after they interacted with chatbots. In one incident, a teenager attacked their parents after following a chatbot's recommendations. These incidents became a catalyst for strict regulatory measures.

Restrictions in Three States

Three American states have so far introduced strict restrictions:

Utah (May 2025)

Utah adopted comprehensive regulatory measures:

- Mental health chatbots must clearly and regularly warn users that they are communicating with AI

- Using users' personal data for targeted advertising is prohibited.

- AI can only perform administrative tasks

- Analyzing a user's mental state and giving recommendations are permitted only under the supervision of a licensed specialist

Nevada (July 2025)

Nevada introduced the strictest restrictions:

- Complete ban on advertising and selling "virtual therapist" services

- Licensed psychologists cannot use AI directly in therapeutic practice

- Artificial intelligence is allowed only for administrative purposes

- Violations carry fines of up to $15,000

Illinois (August 2025)

Illinois also adopted strict measures:

- AI is categorically prohibited from conducting therapeutic sessions

- Artificial intelligence cannot provide medical recommendations or make diagnoses

- Even advertising AI therapy services is banned

- Violations carry a fine of $10,000

Expansion to Other States

At least three more states (California, Pennsylvania, and New Jersey) are developing similar legislation. This suggests a growing nationwide recognition of how serious the problem is.

Conclusion

The restrictions on AI therapists in the USA reflect justified concern for user safety. Despite the potential of artificial intelligence in healthcare, mental health care requires a particularly delicate approach and a level of professional competence that today's chatbots do not yet possess.

More detailed information about AI regulation in healthcare is available on the official website of the American Psychological Association.
