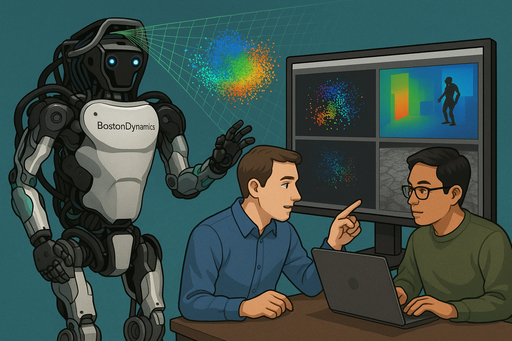

Boston Dynamics has described in detail how its humanoid robot Atlas perceives and interacts with the world. The robot can perform complex manufacturing tasks thanks to a computer vision and machine learning system that enables precise object identification and real-time interaction.

G. Ostrov

Executing complex tasks in modern manufacturing requires robots to have a deep understanding of the geometric and semantic properties of surrounding objects. Boston Dynamics engineers have presented a detailed overview of how their humanoid robot Atlas "sees" the world through a flexible and adaptive perception system.

The Complexity of Simple Tasks

Even seemingly simple tasks — such as picking up an automotive part and installing it in the correct location — break down into multiple stages, each requiring extensive knowledge of the surrounding environment. Atlas must first detect and identify the object, which is particularly challenging when working with shiny or low-contrast parts.

After identification, the robot determines the precise location of the object so that it can grasp it. The object may be on a table, in a container with limited space, or in other challenging conditions. Atlas then decides where to install the part and how to deliver it there.

Centimeter-Level Precision

High placement precision is critical to Atlas's operation. A deviation of even a couple of centimeters can cause a part to be installed incorrectly or dropped. To prevent this, the robot is equipped with an action correction system based on computer vision.

Perception System Architecture

Atlas's perception system includes:

- Precisely calibrated sensors

- AI algorithms based on machine learning

- Environmental state assessment system

- Object detection module

The detection system provides object data in the form of identifiers, bounding boxes, and points of interest. In automotive manufacturing, Atlas detects shelves of various shapes and sizes, determining not only their type but also their precise location so that it can avoid collisions.
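The detection output described above can be pictured as a small record per object. The following is a minimal sketch, not Boston Dynamics' actual data format: the class names `BoundingBox` and `Detection`, their fields, and the pixel-coordinate convention are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Tuple

Pixel = Tuple[float, float]  # (u, v) image coordinates, assumed to be in pixels


@dataclass(frozen=True)
class BoundingBox:
    """Axis-aligned box in image coordinates (hypothetical layout)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def center(self) -> Pixel:
        # Midpoint of the box along each image axis.
        return ((self.x_min + self.x_max) / 2, (self.y_min + self.y_max) / 2)

    def contains(self, point: Pixel) -> bool:
        u, v = point
        return self.x_min <= u <= self.x_max and self.y_min <= v <= self.y_max


@dataclass(frozen=True)
class Detection:
    """One detected object: identifier, bounding box, points of interest."""
    object_id: str
    box: BoundingBox
    keypoints: Tuple[Pixel, ...] = ()


# Illustrative values only; they are not taken from the source.
shelf = Detection(
    object_id="shelf_A",
    box=BoundingBox(100.0, 50.0, 300.0, 250.0),
    keypoints=((120.0, 60.0), (280.0, 240.0)),
)
shelf_center = shelf.box.center()
```

Keeping the record immutable (`frozen=True`) is a design choice for the sketch: downstream code can pass detections around without worrying that another stage has modified them.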

Key Points and Localization

The robot uses two types of two-dimensional pixel points:

- External points (green): objects that should be navigated around

- Internal points (red): a more varied set of points that reflect the arrangement of shelves and the locations of boxes, enabling precise object localization

To classify objects and predict the locations of points of interest, Atlas uses a lightweight network architecture that balances performance and perception accuracy.
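One common way to turn 2D points into a location estimate is to compare them with where known 3D points would appear in the image under a candidate pose. The sketch below assumes a pinhole camera model and points already expressed in the camera frame. `Intrinsics`, `project`, and `mean_reprojection_error` are hypothetical names, and the source does not state that Atlas localizes objects this way.

```python
from dataclasses import dataclass
from math import hypot
from typing import Sequence, Tuple

Point3 = Tuple[float, float, float]  # (x, y, z) in the camera frame, meters
Pixel = Tuple[float, float]          # (u, v) in pixels


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera parameters (assumed known from calibration)."""
    fx: float
    fy: float
    cx: float
    cy: float


def project(point_cam: Point3, k: Intrinsics) -> Pixel:
    """Project a 3D camera-frame point onto the image plane."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (k.fx * x / z + k.cx, k.fy * y / z + k.cy)


def mean_reprojection_error(
    points_cam: Sequence[Point3],
    detected_px: Sequence[Pixel],
    k: Intrinsics,
) -> float:
    """Average pixel distance between projected and detected points."""
    if not points_cam or len(points_cam) != len(detected_px):
        raise ValueError("need equally sized, non-empty point lists")
    total = 0.0
    for point, (du, dv) in zip(points_cam, detected_px):
        u, v = project(point, k)
        total += hypot(u - du, v - dv)
    return total / len(points_cam)
```

A lower error means the candidate pose explains the detected points better, so a localizer built on this idea would search for the pose that minimizes it.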

SuperTracker System

Atlas's manipulation skills are based on the SuperTracker position tracking system, which combines several information streams: robot kinematics, computer vision, and other data. Kinematic information from joint encoders makes it possible to determine where the grippers are in space.
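The idea of locating a gripper from joint encoder readings is forward kinematics. As a rough illustration, the sketch below computes the end position of a planar serial arm. A real humanoid has many more joints and works in 3D, so the planar chain, the function name `planar_forward_kinematics`, and the link lengths are simplifying assumptions, not details from the source.

```python
from math import cos, sin
from typing import Sequence, Tuple


def planar_forward_kinematics(
    joint_angles: Sequence[float],
    link_lengths: Sequence[float],
) -> Tuple[float, float]:
    """Return the (x, y) end position of a planar serial arm.

    joint_angles are relative angles in radians, as an encoder would
    report them; link_lengths are in meters.
    """
    if len(joint_angles) != len(link_lengths):
        raise ValueError("one angle is required per link")
    x = y = 0.0
    heading = 0.0  # accumulated orientation of the current link
    for angle, length in zip(joint_angles, link_lengths):
        heading += angle
        x += length * cos(heading)
        y += length * sin(heading)
    return x, y
```

With both joints at zero, the arm lies stretched along the x axis, so the end position is simply the sum of the link lengths.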

When an object is in the field of view, Atlas uses a position estimation model trained on a large synthetic dataset. The model can handle new objects by using their CAD models for precise positioning.
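To make the combination of kinematic and visual information concrete, here is a deliberately simple sketch: use the vision estimate when the object is visible, blended with the kinematic prediction, and fall back to kinematics alone otherwise. The fixed-weight blend, the function name `fuse_position`, and the default weight are assumptions chosen for illustration. The source does not describe how SuperTracker actually weighs its inputs.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) position in meters


def fuse_position(
    kinematic: Vec3,
    vision: Optional[Vec3],
    vision_weight: float = 0.8,  # hypothetical value, not from the source
) -> Vec3:
    """Blend a kinematic position prediction with a vision estimate.

    If the object is not visible (vision is None), the kinematic
    prediction is returned unchanged.
    """
    if not 0.0 <= vision_weight <= 1.0:
        raise ValueError("vision_weight must be between 0 and 1")
    if vision is None:
        return kinematic
    w = vision_weight
    return (
        (1 - w) * kinematic[0] + w * vision[0],
        (1 - w) * kinematic[1] + w * vision[1],
        (1 - w) * kinematic[2] + w * vision[2],
    )
```

A production tracker would more likely weigh each source by its estimated uncertainty and also track orientation, but the fallback behavior shown here captures why kinematics remains useful when the object leaves the field of view.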

Future Development

Boston Dynamics plans to further improve Atlas's action precision and adaptability. The team is working on creating a unified base model where perception and action become integrated processes rather than separate systems.

Official Boston Dynamics website: www.bostondynamics.com
